Bentham’s Bulldog Doesn’t Understand Complexity

Complexity isn’t just about making fewer assumptions or being more intuitive - it’s a well-defined mathematical concept

Lately my Substack feed has been featuring a lot of articles by Bentham’s Bulldog, in particular his writing on theism. As an atheist, a physicalist, and a big fan of computational and complexity theory, I’ve found myself disagreeing with virtually all of it (for reference, I’m an AI researcher with a bit of a background in computational complexity theory; I’m staying mostly pseudonymous at the moment, but for background I work on one of the Gemini core modeling teams at Google). And since I’ve been wanting to take another stab at getting a newsletter off the ground, I figured I might as well restart this blog with a refutation of what he’s written.

The big problem I ran into, however (aside from my having no readers or audience) is that there simply is too much I disagree with. As much as I’d love to go point by point, his explainer on the “Anthropic Argument for Theism” is several thousand words, and it’s just one of many articles defending theism. I already have several different arguments outlined in my drafts folder, but I can’t see myself publishing any of them. It’s the bigger picture I object to, the starting axioms of the entire conversation, and that seems to get lost in piecemeal arguments.

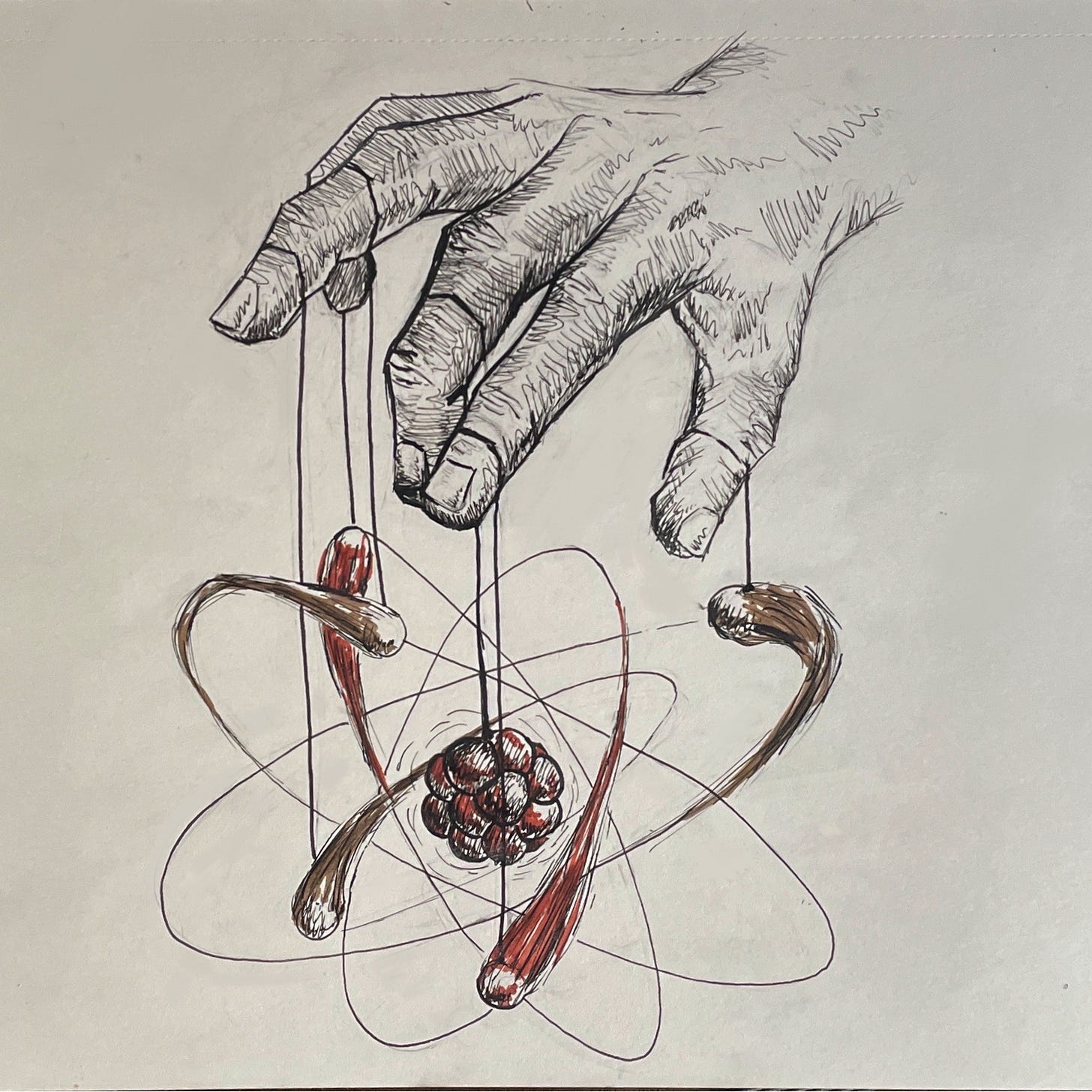

Instead, I’d like to reset the discussion slightly by picking one single idea and building it up from scratch. And the idea I want to discuss is Complexity: the question of whether a universe with a God is simpler or more complex than one without.

My goal is to try to approach this as respectfully as possible, as I don’t really have anything against theism and generally don’t mind if others subscribe to it. Still, I personally find that it’s impossible for me to think about God and not conclude that parsimony demands I reject his existence.

And at this point I’ve read enough of Bentham’s writing that I’ve become convinced he hasn’t really understood this point of view.

The following comment responding to Bentham’s piece on simplicity illustrates this confusion the best. Commenter Warty Dog writes:

some naturalists came up with great definitions of what it means to be simple, in terms of the length of description of a turing machine implementing something. it seems hard to fit the above thoughts in a similar framework

The definitions and framework being referenced come from a concept in algorithmic complexity theory called Kolmogorov complexity, or just K complexity for short. In my opinion, it’s by far the most natural way to measure complexity, and I know Bentham doesn’t truly understand its weightiness or its nuance, because this was his response:

Well, you want description length in an ideal language. You can easily describe God in an ideal language in two symbols--one for limitless the other for mind!

There are several significant misunderstandings in this response, mainly around what “language” means when we’re talking about algorithmic complexity: languages aren’t just whatever you want them to be, and “describing” is a technical term that cannot be circumvented just by loading more meaning into fewer words. And I know it’s just one random comment on one random article, probably one Bentham didn’t even put a lot of thought into, but I think it betrays a deeper misunderstanding of the arguments being leveled against him.

So the rest of this post will be an explanation of K complexity. Hopefully Bentham or someone like him will read this and, if nothing else, come away with a better understanding of what complexity really means and why atheists keep harping on it. And if I’m lucky, maybe when it’s done it will also become clear why, if the notion of God is in any way beholden to the laws of mathematics and reason, we must conclude that a universe with a God is significantly more complex than one without.

What Complexity Is

There are a few different types of complexity; the flavor that I’ll be talking about is known as Kolmogorov complexity (or just K complexity), which roughly defines the complexity of a thing as the length of the shortest code required to program it (in some programming language). Intuitively, it’s basically just a measure of “how hard is it to explain how to make the thing?” - note the phrase “how to make;” that’s a very specific aspect of what we’re measuring. The point of K complexity is that the difficulty of creating a thing and describing precisely what it does in every situation is the best way to really measure how complex it is.
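For readers who want the formal version, the usual textbook statement can be sketched as follows. The notation is introduced here for illustration and is not used elsewhere in this post: U is some fixed Turing-complete language (or universal machine), p ranges over programs, and |p| is the length of p.

```latex
K_U(x) \;=\; \min \{\, |p| \;:\; U(p) \text{ halts and outputs } x \,\}
```

In words: among all programs that make U print x and stop, take the length of the shortest one.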

One big point I want to make up front: “code” here is a proxy for the notion of precise mathematical description. I’ll talk more at the end about what it means to code God or the universe, but for now I’d like to just explain the math in the most generic sense.

Some of the people reading this may already be familiar with K complexity, as it’s basically the foundational concept of a field called algorithmic information theory (also known as algorithmic complexity theory). For the rest, there’s a nice little easy-to-understand intro on Wikipedia. The examples they use are the strings abababababababababababababababab and 4c1j5b2p0cv4w1x8rx2y39umgw5q85s7. The first one can be coded as ‘ab’ * 16, since it’s just the same two letters repeated 16 times. The second one, however, has no apparent structure and can’t be simplified, so the only way to generate it is code that just spits out 4c1j5b2p0cv4w1x8rx2y39umgw5q85s7. From this we can see, in a way that hopefully matches our intuition, that the first string is much simpler.
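To make the comparison concrete, here is a minimal Python sketch. It treats short Python expressions as stand-in "programs" and uses zlib's compressed size as a crude, computable upper bound on description length. Both are assumptions made for illustration: true K complexity is uncomputable, and the names `program_structured`, `program_random`, and `compressed_size` are hypothetical.

```python
import zlib

# Two candidate "programs", written as Python expressions.
program_structured = "'ab' * 16"
program_random = "'4c1j5b2p0cv4w1x8rx2y39umgw5q85s7'"

# Running each program yields the 32-character string it describes.
structured = eval(program_structured)
random_looking = eval(program_random)


def compressed_size(text: str) -> int:
    """Bytes used by zlib at its highest compression level.

    This is only a rough upper-bound proxy, not K complexity itself.
    """
    return len(zlib.compress(text.encode("ascii"), 9))


for label, program, output in [
    ("structured", program_structured, structured),
    ("random-looking", program_random, random_looking),
]:
    print(label, "| program length:", len(program),
          "| zlib size:", compressed_size(output))
```

Both outputs have the same length, but the program for the first is much shorter than the program for the second, which has to quote its output in full.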

What is not necessarily intuitive is that sometimes things that appear to have a lot of structure can be quite simple. Fractals are the classic example of this, where a very simple equation drawn at a bunch of points creates images that appear to have a lot of complex structures. Not all things are like fractals, though, and sometimes things that seem complex just are complex. A fully-trained LLM like ChatGPT, for instance, is probably going to require hundreds of gigabytes of code to create, one way or another.1
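As a rough illustration of the fractal point, the sketch below draws a coarse ASCII picture of the Mandelbrot set. The whole "law" is the single update z = z*z + c; the function names, grid size, and iteration cap are arbitrary choices made for this example.

```python
MAX_ITER = 50  # arbitrary cap chosen for this sketch


def escapes_after(c: complex) -> int:
    """Return the iteration at which |z| first exceeds 2, or MAX_ITER if it never does."""
    z = 0j
    for i in range(MAX_ITER):
        z = z * z + c
        if abs(z) > 2.0:
            return i
    return MAX_ITER


def render(width: int = 60, height: int = 24) -> str:
    """Mark each grid point '#' if it has not escaped within MAX_ITER steps."""
    rows = []
    for row in range(height):
        y = -1.2 + 2.4 * row / (height - 1)
        line = ""
        for col in range(width):
            x = -2.0 + 3.0 * col / (width - 1)
            line += "#" if escapes_after(complex(x, y)) == MAX_ITER else " "
        rows.append(line)
    return "\n".join(rows)


print(render())
```

The program stays the same size no matter how finely you sample the plane; only the running time and the output grow.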

None of this is really all that tricky. The only real subtlety is that when we’re talking about the K complexity of something, the language we use has to be able to describe how to build the thing in question completely.

Which is to say, we cannot simply use natural language. Rather, our language must be so fundamentally precise that it is impossible to be vague even if you wanted to.

That is why K complexity is defined with respect to programming languages: programming requires you to explicitly describe every piece of your system, and to do so with specific instructions that will be executed exactly how they are written, the same exact way every time.2

This is because programming languages aren’t so much expressing ideas, as they are listing procedures. To the extent that programming languages do express ideas at all, it’s by building them in an extremely bottom-up fashion. You start with a few basic things you can do - “multiply these two numbers”, “store this variable here”, etc - and you compose them one on top of the other in order to do the thing you want to do.
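A small sketch of what bottom-up composition looks like in practice. It assumes, purely for illustration, that addition is the only arithmetic primitive available, and builds multiplication and exponentiation on top of it. The function names are hypothetical.

```python
def add(a: int, b: int) -> int:
    """The one primitive we assume the machine provides."""
    return a + b


def multiply(a: int, b: int) -> int:
    """Multiplication built only from repeated addition (b must be >= 0)."""
    total = 0
    for _ in range(b):
        total = add(total, a)
    return total


def power(base: int, exponent: int) -> int:
    """Exponentiation built only from repeated multiplication (exponent must be >= 0)."""
    result = 1
    for _ in range(exponent):
        result = multiply(result, base)
    return result


print(power(2, 10))
```

Nothing in `power` is taken on faith: every step bottoms out in the primitive, which is exactly the property informal language lacks.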

Think of it like building a car from scratch: first I’d have a procedure for making basic things like screws and wires, then I’d use those basic components to build larger components like the engine or doors, then I’d put those together until I had a car. And if there were a step I didn’t know how to do from scratch - like building an exhaust system - then I wouldn’t have an exhaust system, and unless I came up with some alternative I simply wouldn’t be able to build a car.

Compare this to an informal language like English. In English, I can just say “car” and everyone knows precisely what I mean. I don’t have to explain or in any way already understand the gory details of how a car is made. By its very construction, informal language allows you to have arbitrarily large gaps in your reasoning. And because there’s no hard and fast requirement for specificity it becomes easy to make mistakes or skip steps without noticing. Or, as in the case of “god” or “the universe”, it makes it very easy to describe an extraordinarily complex thing in only a few words.

Which brings me to the first reason Bentham is wrong: complexity isn’t about how hard something is to say, it’s about how much work goes into describing the procedure to create it - every single aspect of it, in a way so clear that a mindless computer can follow your instructions exactly and deterministically produce precisely the exact required output.

What’s in a word?

Having established the need to “build” ideas from the bottom-up, we’ve still left open the possibility of loopholes. We haven’t really defined precisely what the most basic building blocks (what you might call “the atomic operations”) of our code must be. Sure, “multiply two numbers” might be an example of a basic building block, but why couldn’t we just have another building block which is just “be omniscient”? Maybe when it’s time to discuss the complexity of God, we could just write the code in a special programming language where “God” is just a single line? That’s basically what Bentham was suggesting when he said that we could imagine an idealized language where God is just two words.

It’s actually not an unreasonable suggestion at all. Many programming languages have built-in operations that can do complex things with just a line of code. Sorting a list, for instance, is a fairly complex operation, but in Python I can do it efficiently with just a single command. We could very easily imagine a language that also compresses all of God’s complexity into a single command.

But that wouldn’t save us. The reason why was one of Kolmogorov’s greatest insights when he first conceived of K complexity. It’s known as The Invariance Theorem, and it roughly states that if you can program a piece of code in one valid programming language, then for only a fixed amount of extra overhead (one that doesn’t depend on the code itself) you can program it in any other programming language.

Basically, there’s a certain list of minimal commands that a valid (the technical term is “Turing Complete”) programming language must contain - like reading and writing to memory - and if it contains all of these commands, then it has the minimum ingredients to program just about anything. The proof is actually quite simple: if your code is in language X and you need to run it in language Y, just write an interpreter for language X in language Y. In other words, use the second language to write a translator that understands everything written in the first.3
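Stated a little more formally, as a sketch using notation introduced only for this aside: write K_X(x) for the length of the shortest program in language X that outputs x. One standard way to obtain the overhead is an interpreter for X written in Y, whose length is the constant c below.

```latex
K_Y(x) \;\le\; K_X(x) + c_{X \to Y} \qquad \text{for every } x
```

The constant depends on the pair of languages X and Y, but not on x. Swapping the roles of X and Y gives the same bound in the other direction, so the two measures never differ by more than a fixed amount.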

The upshot of all of this is that as far as the theory of K complexity is concerned, the language doesn’t matter. As long as your language’s descriptive ability is above some minimum threshold it’s just as good as any other. But the converse also holds: there is no such thing as a “more powerful” language4.

And that means there really are no loopholes. Bentham’s “ideal” language for God does not and cannot exist, at least not in a mathematically meaningful way, because all languages must be describable by any other Turing complete language. There’s nothing that stops you from inventing a one-command-for-God language, I suppose, but its mere existence wouldn’t endow it with any special power. Either all of its operations could be described just as easily in any other programming language (in which case we gain nothing) or it has operations which can’t be translated (in which case it’s not a language at all, it’s a magical incantation and there’s no reason why we should take it seriously).
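Here is a toy sketch of why a one-command language buys nothing. It invents a hypothetical "ideal" language whose only symbol, `G`, stands for a complicated object (a stand-in string here), and then counts what it costs to use that language from Python. Everything in it, including `INTERPRETER_SOURCE` and `run`, is made up for illustration.

```python
# The interpreter for the hypothetical one-symbol language, kept as source text
# so that its length can be counted.
INTERPRETER_SOURCE = '''
def run(program):
    meanings = {"G": "4c1j5b2p0cv4w1x8rx2y39umgw5q85s7"}
    return "".join(meanings[symbol] for symbol in program)
'''

namespace = {}
exec(INTERPRETER_SOURCE, namespace)  # "install" the toy language
run = namespace["run"]

toy_program = "G"
output = run(toy_program)

# Inside the toy language the description looks tiny...
cost_inside_toy_language = len(toy_program)
# ...but from any ordinary language you must also pay for the interpreter,
# and the interpreter is where the complexity was hiding.
cost_from_python = len(INTERPRETER_SOURCE) + len(toy_program)

print("inside toy language:", cost_inside_toy_language)
print("from Python:", cost_from_python)
print("length of the object itself:", len(output))
```

The single symbol only looks cheap because the language definition has quietly absorbed the whole object. Once the interpreter is counted, the total is at least as large as the thing being described.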

Coding God

Now all that’s left is to ask how any of this actually applies to the debate at hand: What does it actually mean to code God or the universe?

Let’s start with “the universe.” Here, it’s pretty easy to describe what we’d need: the code to run a hypothetical exact simulation of the entire universe. Such a simulation - assuming it were possible - would require a hypothetical mega-computer that simulates everything. It could be as big as we need it to be (much larger than the universe, certainly), and allowed to run for as long as we need it to. The only requirement is that the output of our program needs to be an exact description, down to every sub-atomic particle, of exactly how the universe looks at every possible moment up until a pre-defined end point.

Now, to be clear, a simulation of the universe may not be mathematically possible; I’m aware of the thin line this argument is walking. If the universe were spatially infinite, for instance, that would be just one of many possible barriers to using conventional computation5. But that’s not really the point. The point is that our ability to write or run this simulation doesn’t really get in the way of our ability to wrap our head around it: it’s just all the laws of physics (known and not-yet-known) starting with the singularity and then playing forward until this moment.

And, assuming that the program itself could be run in finite time, there’s no reason to believe the code would be prohibitively large. All known laws of physics involve only a couple equations that could be written in only a few lines of code. These laws may be hard for our human brains to wrap our heads around and even harder to discover, but they’re all quite simple in terms of K complexity. Yes, you’d obviously need incomprehensibly large amounts of memory and compute power, but our setup already gave us all that for free. The code itself would still be short - the universe would not be especially hard to describe. In many ways, it’s like the fractal mentioned above: you start with a singularity and a bunch of laws, then press play and let the chaos organize itself.
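To give a feel for "a short rule plus a simple starting state, then press play", here is a toy universe: the elementary cellular automaton known as Rule 110, started from a single live cell. This is only an analogy and not a model of real physics; the grid width, step count, and wrap-around boundary are arbitrary choices for the sketch.

```python
RULE = 110  # the entire "law of physics" for this toy universe


def step(cells: list[int]) -> list[int]:
    """Apply the rule once; each cell looks at its left neighbour, itself, and its right neighbour."""
    n = len(cells)
    return [
        (RULE >> (cells[i - 1] * 4 + cells[i] * 2 + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def run(width: int = 64, steps: int = 32) -> list[list[int]]:
    """Start from one live cell at the right edge and record every generation."""
    cells = [0] * (width - 1) + [1]
    history = [cells]
    for _ in range(steps):
        cells = step(cells)
        history.append(cells)
    return history


for row in run():
    print("".join("#" if cell else "." for cell in row))
```

Making the grid wider or running it longer changes the memory and time needed, not the length of the code.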

What about God? It’s the same requirement, really - we need to program a simulation of God. That means we need a computer program that not only builds everything that was built by our universe simulation, but does it by first building an all-knowing, all-powerful entity that then uses his powers to create the universe. Remember what I wrote about language above - I’m not asking you to simply write a loop with a line of code that runs a function called “do_god_thing().” I’m asking for a step-by-step, piece by piece, completely from-scratch recipe for building God wholesale from nothing. Tell me how to give him thoughts, describe for me not just how he conceives what his will should be but also the exact mathematical mechanisms by which he makes his will come to pass.

I can only imagine how much the average theist must balk at that last paragraph. A lot of what I’d be asking would seem to contradict so many basic notions of how God is traditionally understood - God is presumably infinite. But that’s just the tip of the iceberg. The real problem is that it asks the forbidden question: how does God actually work? How exactly would one build God, if one had the resources to do so?

Now, I can anticipate a few different responses to this request. The first is to claim it cannot be done - that God exists beyond mere computation (and by extension, mathematics), representing something different entirely.

But if that’s your stance, then I think you’ve already lost. Because what you’re telling me is that God is so complex, so beyond all comprehension, that he can’t even be confined by ideas like algorithmic complexity. To me at least, that sounds like you’re calling God infinitely complex. Certainly, he can’t have low complexity if the very notion of complexity does not apply to him. It shouldn’t even take a theory of algorithmic complexity to make this clear: why should I be compelled to believe in an ineffable Godful universe when I’ve got a perfectly effable Godless one right here?

Another option is to just hold onto the idea that the “code for God” is merely some short statement of some generic idea amplified, like “infinite goodness” or “infinite mind.” We already went over this, though, when we talked about the Invariance Theorem. You can go ahead and have an “ideal” language where “God” or “mind” is just a line of code, but that doesn’t mean anything unless you can implement the same code in Python. The math has no loopholes.

Which leaves us with the final option: actually try to consider what an implementation of God would look like. Here I confess - at the risk of being slightly inflammatory - that I secretly believe no theist has ever really done this. Even the most philosophical, it seems, have spent countless hours questioning if God exists, devising countless existence proofs, pondering endlessly what God could or would do, but with almost no thought to how he would do it, no thought to the cognitive mechanisms behind it all.

But that is what it would take to convince me. I don’t need a full map of the mind of God, I just need reason to believe such a thing was describable, and that description could be complete. Give me a description of God in the spirit of mathematics, make me believe that God is simpler than the universe by proposing a version of God which is comprehensible enough to actually be simple, to at least allow the idea of complexity to apply.

Because I have to say, from my experience, minds are very very complex things. They aren’t something you can fully describe with just a word or two, any more than saying the word “car” gives you any idea how to build a sedan. They are deeply multifaceted, they have pieces and those pieces have pieces. They do different things in different scenarios, they’re dynamic, they reason over things and synthesize information, they construct goals and work towards them.

To build a proper intelligence you need to build in all of that complex decision making and reactive behavior. The closest we as humans have ever come to building minds is modern AI, and for all their deep complexity modern LLMs are only pale shadows of human thought. Think of how astoundingly complex the neural circuitry must be within the human brain; how much code would it take to perfectly simulate such a thing?6 And now consider that whatever an LLM is to a human, God would be ten million million times more than that. How could such vastness be simple?

Consider: for anything that happens in the universe, God decided to make it happen; there was computation required not just to do the thing but also to first think about doing the thing. God must also have considered alternatives, of which there are potentially infinitely many.

And once you had this entire description, you would still need to convince me that it’s not much larger than just writing out all the laws of physics, most of which reduce to fairly compact equations. I don’t think it can be done.

Final Thoughts

I’ve found that to many theists the very act of trying to understand God seems to contradict the entire notion of God. To them, I think a lot of the things I’m asking for may seem inherently out-of-bounds; one simply does not ask for a description of how God functions, it contradicts his mysterious and incomprehensible nature.

Not including a couple insufferable years in college, this sort of stance hasn’t really ever bothered me when it’s come from a non-philosopher; as an atheist I tend to believe very little in life hinges on whether God is actually real.7

But from philosophers at least I’ve always expected a little more. Where God’s machinations were concerned, I always felt that theistic philosophers were too ready to declare huge swaths of idea space out of bounds. So in that sense, this post is a bit of a challenge to the theists: prove me wrong. Show me that you’re willing to roll up your sleeves and get dirty, that you can ask the hard questions about God and actually come back with answers, questions like where god came from, or how he actually works.

Because until you’re willing to grapple meaningfully with those questions, you’ll never be able to convince someone who already has.

When writing for a non-technical audience I often like to say approximately-correct-but-technically-wrong things in my writing to make ideas clearer, and then clarify the technical points in a footnote. In this case, I feel compelled to point out that no one actually knows the K complexity of ChatGPT or any other LLM, so in theory it could possibly be low, but realistically it’s probably not much lower than a (lightly compressed) list of the model’s many parameters.

Modulo things like hardware failures and race conditions and what have you. But we’re all grown-ups here so I won’t waste time explaining why that doesn’t matter for this theoretical discussion.

The reason we don’t care about this extra conversion code is that it depends only on the language, not the program actually being run, so it isn’t affecting the complexity of whatever you’re trying to build.

Technicality: the Church-Turing Thesis could be false and there could be models of computation that can solve problems that Turing machines can’t. But that almost certainly isn’t the case, and unless you’ve got some Turing Award-worthy insight to share, that’s not a line of argument I’d recommend pursuing. And even if the Church-Turing Thesis were false, that doesn’t mean it would automatically have implications for the truth of God.

I see at least three potential technical issues with simulating the universe assuming it were finite, though there are almost certainly others I’m missing. Because they’re very technical, I’m relegating them here to a footnote. But they do imply some interesting connections.

The first problem is our choice of numerical precision. Obviously we’ll never have an exact match of our universe since our computation will need to be discrete, but it’s not unreasonable to ask that we compute everything to some desired degree of accuracy and that this degree holds for all the timesteps we simulate.

Thus, since our simulation’s space and time complexities are allowed to be unbounded, ill-conditioning won’t be an issue because we can select an arbitrarily high precision that reduces error to an arbitrarily small size. The only issue is that we may have constants, like the speed of light, that we can’t derive from scratch using any computation and will therefore need to encode explicitly, adding arbitrary amounts of extra complexity. But this is actually just a restatement of a well-known philosophical issue: the fine-tuning problem. And the solution is the same: the extra complexity goes away if, instead of modeling a single universe, we model a multiverse. Now the arbitrarily complex universal constants can be replaced with a single loop that iterates over all possible constant values and simulates a new universe for each one, adding only negligible extra complexity. If there are multiverses for all possible values, then that’s obviously uncountable and we’d miss any given universe with probability 1, but there’s only so much we can expect of a finite simulation. The multiverse approach also covers all initial conditions, as long as the starting configuration of our universe is in the set we’re iterating over.
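The "single loop" over constants can be sketched with a standard trick called dovetailing: start one more universe each round, then advance every universe started so far by one step. The sketch below only produces the schedule. The integer universe index is a hypothetical stand-in for "the k-th candidate setting of the constants" in some countable enumeration, and no actual physics is simulated.

```python
from itertools import count, islice
from typing import Iterator, Tuple


def dovetail() -> Iterator[Tuple[int, int]]:
    """Yield (universe_index, step_index) pairs so that every universe
    eventually receives every step, even though there are infinitely many."""
    steps_done = []  # steps_done[k] = number of steps already run in universe k
    for _ in count():
        steps_done.append(0)  # start one more universe this round
        for k in range(len(steps_done)):
            yield (k, steps_done[k])  # advance universe k by one step
            steps_done[k] += 1


# Show the first few scheduled steps.
print(list(islice(dovetail(), 10)))
```

The scheduler is a handful of lines regardless of how many universes it ends up running, which is the sense in which the loop adds only negligible complexity. It only reaches a countable list of settings, which matches the caveat above about uncountably many values.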

The second problem is quantum randomness. Because all quantum interactions are probabilistic in nature and appear to be genuinely random (as opposed to merely pseudo-random), this naively suggests that we might need to add massive extra complexity to specify the random outcomes of all measurements. Like the previous issue, however, this turns out to map nicely to a mainstream philosophical problem: the appropriate interpretation of quantum mechanics. And, similar to the multiverse solution above, we can eliminate effectively all the extra complexity by adopting the many-worlds interpretation and simulating all worlds simultaneously. I’m sure I’m not the first to discover this, but this makes Kolmogorov complexity a pretty interesting piece of evidence for both the many-worlds interpretation and the multiverse hypothesis, since in both cases we are radically increasing the size of reality while radically reducing its actual complexity (as measured by K complexity).
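A toy version of this trade-off, under the simplifying assumption that each "measurement" has two outcomes: generating every possible record of n outcomes takes a few lines of code, while pinning down one particular record generally means writing out all n outcomes. The names and the sample record are made up for illustration.

```python
from itertools import product


def all_histories(n: int) -> list[str]:
    """Every possible sequence of n binary measurement outcomes."""
    return ["".join(bits) for bits in product("01", repeat=n)]


# One specific 16-outcome record, chosen arbitrarily for this example.
one_history = "0110100110010110"

histories = all_histories(len(one_history))
print("number of histories generated:", len(histories))
print("our record is among them:", one_history in histories)
```

The enumerating program barely grows as n increases, whereas a program that outputs only one typical record has to carry that record inside it.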

The third problem, however, is how we define a timestep in our simulation. The issue that I see is that relativity tells us that time passes differently in different reference frames. Here, I confess that I simply don’t know the answer. I’m not familiar enough with relativity to know how easy it would be to create a “simulation clock” with discrete time steps of sufficiently small size; maybe a simulation would be impossible. Still, I would be very surprised if no solution could exist, since time still moves forward in all frames. In any case, relativity clearly exists whether or not God is real, so the solution to this problem doesn’t affect anything else in our discussion. If anyone is an expert on the subject, I’d love to get your thoughts.

Remember that a brain is not created in a vacuum. All that “training data” needs to be part of the code.

If I’m correct about atheism, anyway. If the theists are right then I suppose quite a lot hinges on it.

While I am very sympathetic to Onid's argument here, ultimately I'm not sure it's a very good counter to theists. They will simply deny that such mathematical or computational approaches are applicable to God. In particular, there is no proof that everything that exists is computable, so they can simply deny the completeness of Turing computation. They can then define an alternative notion of simplicity based on the number of novel substances or properties they need to postulate for God to exist (or whatever other claim they want to make). I think this approach is hand-wavy and unconvincing, but I don't think you can refute it by pointing out that it is inconsistent with Kolmogorov complexity. They'll simply agree and ask why they should care about that.

Excellent. Thank you for this! In my view, theists’ reluctance to grapple with these questions is evidence that their primary strategy for arguing for god is essentially an unserious appeal to magic. They raise their hands in exasperation, “It’s all so complicated and improbable! Why is all of this crazy world this way and not some other way?! I know, god did it! He can do anything, by definition. Phew, mystery solved. Don’t ask me how he did it; he’s infinitely mysterious and beyond all comprehension or reason!” God to them is a “magical incantation” (as you put it) to “make sense” of any and all phenomena. Of course, almost by definition, making sense of things by attributing them to magical, not-understood entities is not actually to make sense of them at all, as doing so increases understanding in no substantial way whatsoever.